In conjunction with SDM, LzBatch™ supports the execution of legacy mainframe batch jobs in a modern x86 Linux environment. LzBatch provides binary-compatible execution for batch applications written in COBOL, PL/1 or Assembler, which means that an application can run without recompilation on Linux on x86 hardware. Standard mainframe Job Control Language (JCL) and REXX are supported, so batch jobs can be submitted locally to the SDM or, for example, from mainframes connected through Network Job Entry (NJE). An instance of SDM can operate as a node in an NJE network, both sending workload to and receiving workload from any other node in the network. Consequently, existing mainframe batch workload can be re-routed to run on SDM simply by adding a JCL routing card.
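To illustrate what the routing card changes, the following Python sketch inspects a job's JCL for an execution routing statement. It then decides whether the job runs on the local node or is forwarded to another NJE node. The sketch assumes JES2-style `/*ROUTE XEQ nodename` and `/*XEQ nodename` statements; the exact forms LzBatch accepts are not described here. `route_job` and `RoutingDecision` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumption: JES2-style execution routing statements, e.g. "/*ROUTE XEQ SDM1"
# or "/*XEQ SDM1". Node names are taken as 1-8 characters from A-Z, 0-9, @, # and $.
ROUTE_XEQ = re.compile(r"^/\*(?:ROUTE\s+XEQ|XEQ)\s+([A-Z0-9@#$]{1,8})(?=\s|$)")


@dataclass
class RoutingDecision:
    execute_locally: bool
    target_node: Optional[str]  # NJE node the job is forwarded to, if any


def route_job(jcl: str, local_node: str) -> RoutingDecision:
    """Hypothetical helper: decide where a job executes from its first XEQ routing card."""
    for line in jcl.splitlines():
        match = ROUTE_XEQ.match(line.strip().upper())
        if match:
            node = match.group(1)
            if node == local_node.upper():
                return RoutingDecision(execute_locally=True, target_node=None)
            return RoutingDecision(execute_locally=False, target_node=node)
    # No routing card: the job runs on the node where it was submitted.
    return RoutingDecision(execute_locally=True, target_node=None)


# The same job, re-routed from a mainframe node (MVS1) to an SDM node by one extra card.
job = """//PAYROLL  JOB (ACCT),'NIGHTLY RUN',CLASS=A
/*ROUTE XEQ SDM1
//STEP1    EXEC PGM=PAYCALC
"""
print(route_job(job, local_node="MVS1"))
# RoutingDecision(execute_locally=False, target_node='SDM1')
```

The rest of the job stream is left untouched, which reflects the point above that re-routing needs only the added card rather than changes to the job's steps or programs.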

In a legacy mainframe environment, batch jobs were usually associated with a particular class of work. The operating system's job scheduling component then scheduled this work into initiators defined to support that class, and the operations team may have allocated different performance resources to different initiators. In most mainframe organizations, batch jobs were submitted locally, either by individuals using TSO or by an automated job scheduling system that may have instructions to run jobs in a particular order or at a particular time of day. LzBatch supports class-based batch workload scheduling in the SDM, and this workload can be distributed across multiple instances of the SDM. When LzBatch is used with a job scheduling solution such as SMA Solutions OpCon, sophisticated distributed and coordinated workflows can be defined.
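The class-based model can be sketched as a set of per-class queues that initiators draw from. This is a simplified Python model with hypothetical names, not the LzBatch implementation. Each initiator serves an ordered list of classes and takes the highest-priority job from the first class that has work. Jobs of equal priority are taken first in, first out.

```python
import heapq
import itertools
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, List, Optional, Tuple


@dataclass
class Initiator:
    name: str
    classes: str  # ordered class list, e.g. "BA" = try class B first, then class A


class ClassScheduler:
    """Simplified, hypothetical model of class-based batch scheduling (not the LzBatch API)."""

    def __init__(self) -> None:
        # job class -> min-heap of (-priority, submission sequence, job name)
        self._queues: DefaultDict[str, List[Tuple[int, int, str]]] = defaultdict(list)
        self._seq = itertools.count()  # breaks ties so equal priorities run first in, first out

    def submit(self, job_name: str, job_class: str, priority: int = 0) -> None:
        heapq.heappush(self._queues[job_class], (-priority, next(self._seq), job_name))

    def select(self, initiator: Initiator) -> Optional[str]:
        """Return the next job for this initiator, or None if none of its classes has work."""
        for job_class in initiator.classes:
            queue = self._queues.get(job_class)
            if queue:
                return heapq.heappop(queue)[2]
        return None


sched = ClassScheduler()
sched.submit("PAYROLL", "A", priority=5)
sched.submit("REPORTS", "B", priority=9)
sched.submit("BACKUP", "A", priority=1)

init1 = Initiator("INIT1", "A")   # dedicated to class A
init2 = Initiator("INIT2", "BA")  # prefers class B, falls back to class A
print(sched.select(init2))  # REPORTS
print(sched.select(init1))  # PAYROLL
print(sched.select(init2))  # BACKUP
print(sched.select(init1))  # None
```

In this model, scanning each initiator's class list in order is what allows some initiators to be dedicated to a single class while others pick up several classes as overflow capacity.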

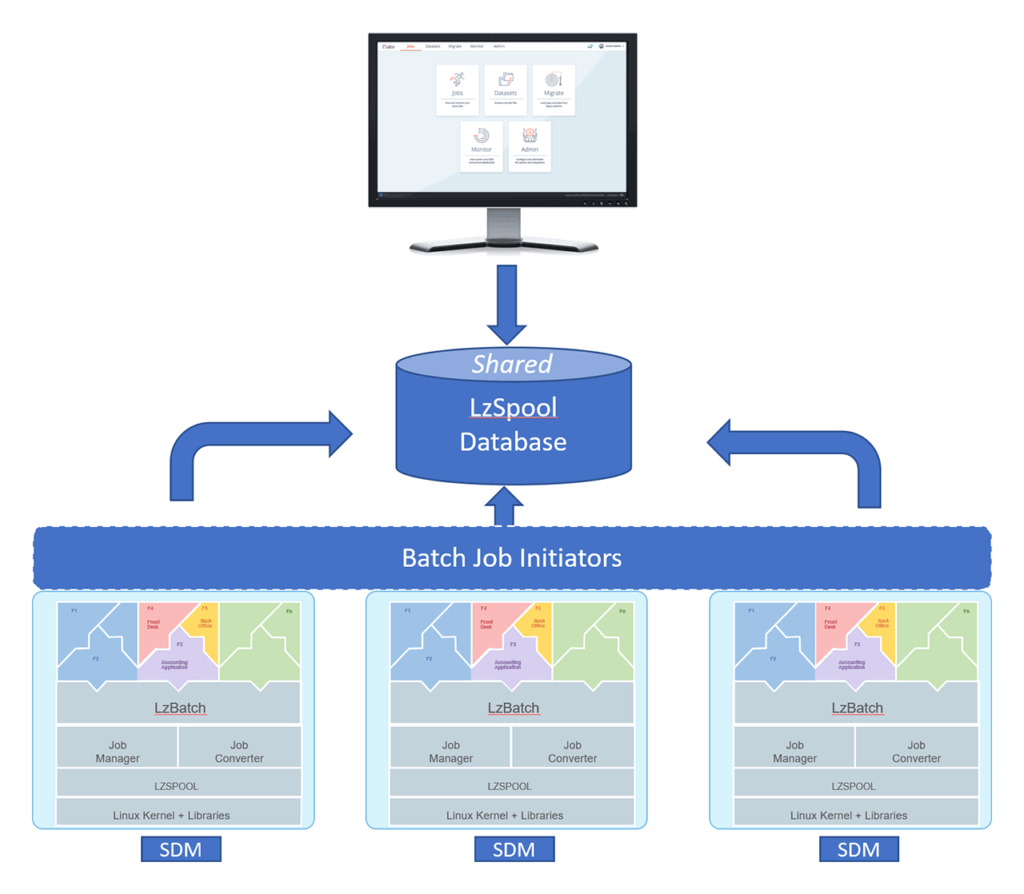

Scaling Batch Workload – An SDM Cluster

SDM is designed to leverage the scale-out architecture of modern IT infrastructures. A clustered environment can be created from multiple instances of SDM running on multiple servers, or from multiple containers running in a cloud infrastructure. Each SDM instance can be assigned specific workload using traditional mainframe initiator classes and priorities. As long as an instance of SDM has its Job Manager/Converter functions running, it can execute batch workload according to its initiator and class configuration (see Figure 1). Each instance of SDM can be identified by a legacy mainframe SYSID. This is not strictly required. However, it can make it easier for organizations to transition from their existing mainframe structure, or to add an SDM instance that processes batch workload in parallel with existing environments. It also enables a single instance of the LzLabs Management Console to manage the horizontally scaled batch environment as one unified cluster.

Some batch jobs may need to run on a particular SDM instance, for example because of where their data is located. The traditional system affinity (SYSAFF) JCL parameter is supported for this purpose. Printed output can be routed to a single instance of SDM, creating a "shared spool" environment.
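Placement across a cluster combines the class configuration with system affinity. The sketch below treats an instance as eligible for a job only if three conditions hold:

* its Job Manager/Converter functions are running;
* one of its initiators serves the job's class;
* any SYSAFF list on the job names the instance's SYSID.

Choosing the least-busy eligible instance is an assumed policy for illustration, not a documented LzBatch behavior. The class and field names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass
class SdmInstance:
    sysid: str
    classes: FrozenSet[str]   # job classes served by this instance's initiators
    job_manager_active: bool  # hypothetical flag: Job Manager/Converter functions running
    active_jobs: int = 0


@dataclass
class Job:
    name: str
    job_class: str
    sysaff: Optional[FrozenSet[str]] = None  # None models "no affinity": any SYSID is acceptable


def eligible(job: Job, inst: SdmInstance) -> bool:
    if not inst.job_manager_active:
        return False
    if job.job_class not in inst.classes:
        return False
    return job.sysaff is None or inst.sysid in job.sysaff


def place(job: Job, cluster: List[SdmInstance]) -> Optional[SdmInstance]:
    """Pick the least-busy eligible instance (assumed policy), or None if the job must wait."""
    candidates = [inst for inst in cluster if eligible(job, inst)]
    if not candidates:
        return None
    target = min(candidates, key=lambda inst: inst.active_jobs)
    target.active_jobs += 1
    return target


cluster = [
    SdmInstance("SDM1", frozenset("AB"), True),
    SdmInstance("SDM2", frozenset("A"), True),
    SdmInstance("SDM3", frozenset("AB"), False),  # Job Manager/Converter not running
]
print(place(Job("PAYROLL", "A"), cluster).sysid)  # SDM1
print(place(Job("REPORTS", "A"), cluster).sysid)  # SDM2
# SYSAFF names SDM2, but SDM2 serves no class B initiator, so the job waits.
print(place(Job("LEDGER", "B", sysaff=frozenset({"SDM2"})), cluster))  # None
```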

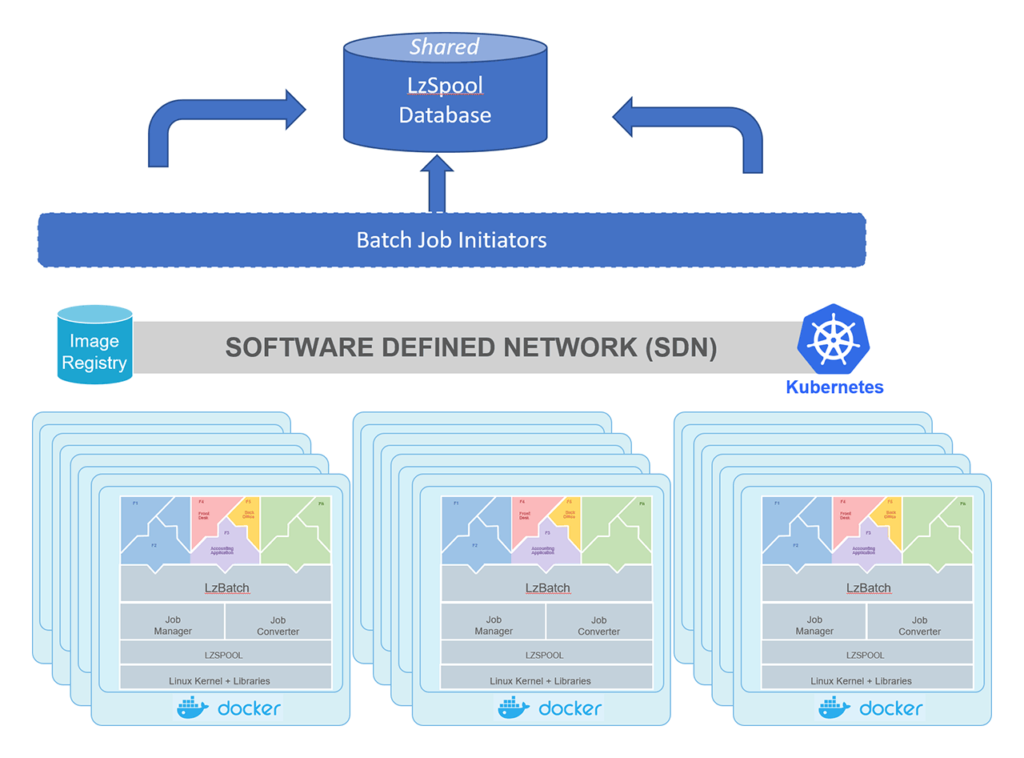

Batch Workload and Containers

As SDM continues to evolve, an organization can leverage containers in a cloud infrastructure deployment model to spin up instances of SDM that run batch workload and then terminate them when that workload is complete. For example, Docker containers could be orchestrated by Kubernetes on an OpenShift cluster. (See Figure 2)

This is a much more flexible approach than the traditional mainframe model. The ability to spin up SDM instances on demand for batch workload can be especially helpful when maintaining or enhancing programs migrated to SDM. Batch processes could be spun up to compile changed programs and to run the test suites that verify the change.
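The spin-up/terminate lifecycle for such a change pipeline can be sketched with a Python context manager, which guarantees that the instance is terminated even if a job fails. `start_instance` and `stop_instance` are hypothetical placeholders for calls to a real orchestrator such as Kubernetes; here they only record events. Treating return codes above 4 as failures is also an assumption.

```python
from contextlib import contextmanager
from typing import Callable, Dict, Iterator, List

events: List[str] = []  # records lifecycle steps so the sketch can run without a cluster


def start_instance(name: str) -> str:
    # Hypothetical placeholder for asking an orchestrator to start an SDM container.
    events.append(f"start {name}")
    return name


def stop_instance(name: str) -> None:
    # Hypothetical placeholder for terminating the container.
    events.append(f"stop {name}")


@contextmanager
def ephemeral_sdm(name: str) -> Iterator[str]:
    """Spin up an instance for the duration of a workload and always terminate it afterwards."""
    instance = start_instance(name)
    try:
        yield instance
    finally:
        stop_instance(instance)


def run_change_pipeline(
    jobs: List[str], run_job: Callable[[str, str], int], max_rc: int = 4
) -> Dict[str, int]:
    """Run compile and test jobs on a throwaway instance; stop once a job exceeds max_rc."""
    results: Dict[str, int] = {}
    with ephemeral_sdm("sdm-build") as instance:
        for job in jobs:
            rc = run_job(instance, job)
            results[job] = rc
            if rc > max_rc:
                break
    return results


def fake_run(instance: str, job: str) -> int:
    events.append(f"{instance}: {job}")
    return 0


print(run_change_pipeline(["COMPILE", "TESTSUITE1", "TESTSUITE2"], fake_run))
# {'COMPILE': 0, 'TESTSUITE1': 0, 'TESTSUITE2': 0}
print(events)
```

Tying the instance's lifetime to the `with` block is what makes it disposable: compute is consumed only while the compile and test jobs run.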

This is a clear example of the key principle behind the Software Defined Mainframe: "Just enough mainframe, and no more." We provide functional equivalence for "just enough" of the mainframe to enable applications to run in their legacy binary form. In all other ways, we leverage modern infrastructure and deployment technology to execute mainframe workload. This approach reduces the risk of migration while providing an iterative path to the benefits of an open, scale-out environment.